Load Testing Anomalies

Understanding Load Testing Anomalies

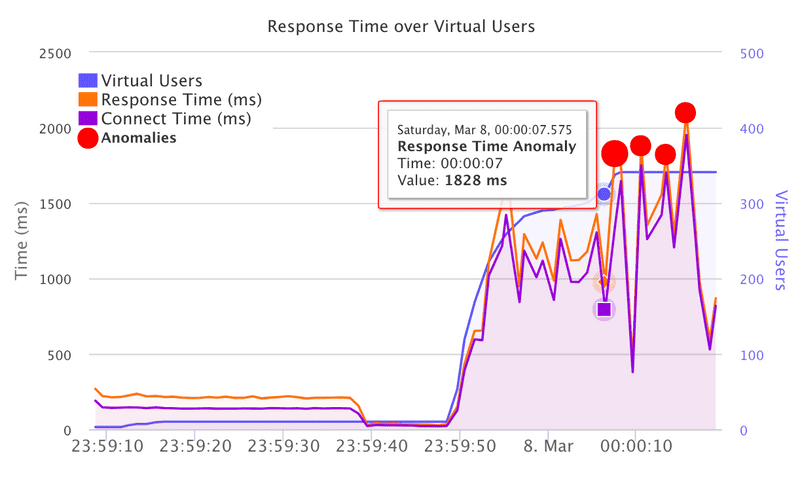

When running load tests on LoadFocus, you may notice red dots or markers on the charts indicating sudden spikes in response time. These markers are anomalies: statistical outliers in your load test data that merit closer attention.

What These Anomalies Represent

The red dots highlight points where the response time spiked well beyond the normal range observed in your data. The anomaly detection algorithm typically uses a standard deviation approach to identify outliers. Any data point more than 2 standard deviations away from the mean response time is flagged as an anomaly.
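The exact LoadFocus implementation is not described here, so the following is only a minimal Python sketch of the 2-standard-deviation rule. It assumes response times are available as a plain list of milliseconds and uses the population standard deviation; the function name `flag_anomalies` is hypothetical. Like the rule above, it flags points in either direction from the mean.

```python
from statistics import mean, pstdev


def flag_anomalies(response_times_ms, threshold=2.0):
    """Return the indices of samples lying more than `threshold`
    population standard deviations from the mean (a z-score test)."""
    mu = mean(response_times_ms)
    sigma = pstdev(response_times_ms)
    if sigma == 0:
        return []  # every sample is identical, so nothing stands out
    return [
        i
        for i, rt in enumerate(response_times_ms)
        if abs(rt - mu) > threshold * sigma
    ]


# Hypothetical per-interval average response times (ms) from one test run.
samples = [120, 118, 125, 122, 119, 121, 640, 123]
print(flag_anomalies(samples))  # → [6]
```

Here the mean is 186 ms and the standard deviation is roughly 172 ms, so only the 640 ms sample falls outside the 2-standard-deviation band.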

Why They’re Important

Potential Breaking Points: Anomalies can signal thresholds where your system begins to struggle under increased load. If spikes coincide with higher virtual user counts, this may be an early warning that your infrastructure or application code is nearing its capacity.

Bottlenecks: Sudden response time increases can point to resource contention (CPU, memory, or disk), database locks, or cache misses. Identifying these spikes helps you focus your optimization efforts on the most problematic areas.

External Dependencies: Third-party services or APIs can also introduce anomalies if they respond slowly or encounter their own performance issues. Tracking anomalies helps you see whether these dependencies are contributing to your overall latency.

Memory Issues: Garbage collection pauses often show up as periodic response time spikes, and memory leaks can make those spikes more frequent or more severe as a test runs. If your anomalies occur at regular intervals, it may indicate a memory management issue.
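One rough way to check whether anomalies recur "at regular intervals" is to look at how consistent the gaps between them are. The sketch below is a hypothetical heuristic, not a LoadFocus feature: it assumes you have anomaly timestamps in seconds and treats a coefficient of variation of the gaps at or below an arbitrary `max_cv=0.2` as "roughly periodic".

```python
from statistics import mean, pstdev


def looks_periodic(anomaly_times_s, max_cv=0.2):
    """Heuristic: True if the gaps between consecutive anomalies are
    roughly constant, i.e. their coefficient of variation is small."""
    if len(anomaly_times_s) < 3:
        return False  # need at least two gaps to compare
    times = sorted(anomaly_times_s)
    gaps = [later - earlier for earlier, later in zip(times, times[1:])]
    avg_gap = mean(gaps)
    if avg_gap == 0:
        return False  # all anomalies share one timestamp
    return pstdev(gaps) / avg_gap <= max_cv


# Hypothetical anomaly timestamps, in seconds from the start of the test.
print(looks_periodic([30, 60, 91, 120, 150]))  # → True (about every 30 s)
print(looks_periodic([5, 12, 80, 95, 300]))  # → False
```

A positive result only suggests a pattern worth comparing against garbage collection logs; it does not prove a memory problem.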

What to Investigate

When anomalies appear, use the following checklist to pinpoint their root cause:

Load Correlation: Check whether anomalies begin once the load crosses a certain threshold. For example, do spikes appear once you go beyond 500 or 1,000 virtual users?
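To see where anomalies start, you can bucket samples by virtual user count and compute the share of flagged samples per bucket. This is a minimal sketch assuming you have exported `(virtual_users, is_anomaly)` pairs from a test run; the function name and the 250-user bucket width are illustrative choices.

```python
from collections import defaultdict


def anomaly_rate_by_load(samples, bucket_size=250):
    """Group (virtual_users, is_anomaly) samples into virtual-user buckets
    and return the fraction of anomalous samples in each bucket."""
    totals = defaultdict(int)
    flagged = defaultdict(int)
    for vus, is_anomaly in samples:
        bucket = (vus // bucket_size) * bucket_size  # lower edge of the bucket
        totals[bucket] += 1
        flagged[bucket] += int(is_anomaly)
    return {bucket: flagged[bucket] / totals[bucket] for bucket in sorted(totals)}


# Hypothetical samples: (active virtual users, flagged as anomaly?)
samples = [(100, False), (200, False), (300, False), (400, False),
           (600, True), (700, False), (800, True), (900, True)]
print(anomaly_rate_by_load(samples))
# → {0: 0.0, 250: 0.0, 500: 0.5, 750: 1.0}
```

In this made-up data, anomalies first appear in the 500-749 user bucket, which would be the range to examine more closely.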

System Metrics: Review CPU, memory, disk I/O, and network usage on your servers at the exact timestamps where anomalies occurred. Look for resource saturation or sudden drops in performance.
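Lining up anomaly timestamps with server metrics can be scripted once both are exported. The sketch below assumes CPU samples as `(timestamp_s, percent)` pairs from your own monitoring tool, clocks that are synchronized with the load test, and a hypothetical 90% saturation cutoff with a ±5 second window.

```python
def saturated_at_anomalies(anomaly_ts, cpu_samples, window_s=5.0, saturation_pct=90.0):
    """Return the anomaly timestamps where the peak CPU reading within
    +/- window_s seconds reached saturation_pct."""
    hits = []
    for ts in anomaly_ts:
        # CPU readings recorded close to this anomaly
        nearby = [pct for t, pct in cpu_samples if abs(t - ts) <= window_s]
        if nearby and max(nearby) >= saturation_pct:
            hits.append(ts)
    return hits


# Hypothetical data: CPU sampled every 10 s, three anomalies.
cpu = [(0, 40.0), (10, 45.0), (20, 95.0), (30, 50.0), (40, 92.0)]
print(saturated_at_anomalies([12, 22, 41], cpu))  # → [22, 41]
```

The same pattern applies to memory, disk I/O, or network metrics; only the cutoff changes.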

Database Performance: If your application relies heavily on a database, examine query execution times, locks, or deadlocks at the moment of the spike.

Code Paths: Identify which specific API endpoints or functions are involved in the anomalies. This helps isolate whether the issue is limited to certain parts of your code.
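If your results include the endpoint for each request, ranking endpoints by anomaly count quickly shows where to look first. This is a minimal sketch assuming `(endpoint, is_anomaly)` pairs; the endpoint paths are hypothetical.

```python
from collections import Counter


def anomalies_by_endpoint(requests):
    """Rank endpoints by how many of their requests were flagged as anomalies."""
    counts = Counter(endpoint for endpoint, is_anomaly in requests if is_anomaly)
    return counts.most_common()


# Hypothetical request results: (endpoint, flagged as anomaly?)
requests = [("/api/cart", True), ("/api/login", False), ("/api/cart", True),
            ("/api/search", True), ("/api/login", False)]
print(anomalies_by_endpoint(requests))  # → [('/api/cart', 2), ('/api/search', 1)]
```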

External Factors: Sometimes anomalies coincide with network issues, deployment events, or third-party API slowdowns. Correlate your load test timeline with any external changes or known incidents.
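Correlating the timeline can be as simple as pairing each anomaly with events that happened shortly before it. The sketch assumes you keep your own event log as `(timestamp_s, description)` pairs, for example from deployment or incident records, and uses a hypothetical 120-second lookback.

```python
def explain_anomalies(anomaly_ts, events, lookback_s=120.0):
    """Map each anomaly timestamp to the events that occurred at most
    lookback_s seconds before it (or at the same moment)."""
    return {
        ts: [desc for event_ts, desc in events if 0 <= ts - event_ts <= lookback_s]
        for ts in anomaly_ts
    }


# Hypothetical event log and anomaly timestamps, in seconds.
events = [(1000, "deploy v2.3"), (1500, "payment API incident")]
print(explain_anomalies([1050, 1300, 1550], events))
# → {1050: ['deploy v2.3'], 1300: [], 1550: ['payment API incident']}
```

Anomalies with no nearby event, like the one at 1300 s here, are more likely to come from the system under test itself.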

How to Act on Anomalies

Scaling: If anomalies are tied to load thresholds, consider scaling up your infrastructure or optimizing your application code to handle higher concurrency.

Caching and Database Optimization: Evaluate whether improved caching strategies or optimized database queries could reduce load on your system and smooth out spikes.

Monitoring and Alerting: Set up real-time alerts and monitoring for your production environment so you can catch these anomalies before they affect end users.
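In production, there is no complete dataset to compute a single mean over, so one option is a rolling window. The class below is a hypothetical sketch of that idea, not a LoadFocus or monitoring-vendor API: it alerts when a new response time exceeds the recent mean by more than 2 standard deviations, and it only checks the upward direction because spikes are the concern. The window size and warm-up count are illustrative defaults.

```python
from collections import deque
from statistics import mean, pstdev


class SpikeAlert:
    """Rolling-window spike check: alert when a new response time exceeds
    the recent mean by more than `threshold` standard deviations."""

    def __init__(self, window=60, threshold=2.0, min_samples=10):
        self.history = deque(maxlen=window)  # most recent response times
        self.threshold = threshold
        self.min_samples = min_samples  # don't alert until a baseline exists

    def observe(self, response_time_ms):
        """Record one measurement and return True if it should raise an alert."""
        alert = False
        # Compare against the window *before* adding the new sample,
        # so a spike cannot inflate its own threshold.
        if len(self.history) >= self.min_samples:
            recent = list(self.history)
            mu, sigma = mean(recent), pstdev(recent)
            alert = sigma > 0 and response_time_ms > mu + self.threshold * sigma
        self.history.append(response_time_ms)
        return alert


monitor = SpikeAlert(window=20, min_samples=5)
for rt in [100, 102, 98, 101, 99, 100]:
    monitor.observe(rt)  # baseline forming or values normal: no alert
print(monitor.observe(500))  # → True
```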

Re-Run Tests: After making any changes, re-run your load tests to verify whether the anomalies have been resolved or whether further investigation is needed.
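Because a mean-plus-2-standard-deviations threshold is recomputed from each run's own data, comparing anomaly counts across runs can mislead. One possible approach, sketched here under that assumption, fixes the cutoff from the earlier run and counts how many samples in each run exceed it. The function name is hypothetical, and since spikes in the "before" run also widen its standard deviation, treat the result as a coarse comparison.

```python
from statistics import mean, pstdev


def compare_runs(before_ms, after_ms, threshold=2.0):
    """Derive a fixed cutoff (mean + threshold * std) from the 'before' run
    and count the samples in each run that exceed it."""
    cutoff = mean(before_ms) + threshold * pstdev(before_ms)
    before_count = sum(rt > cutoff for rt in before_ms)
    after_count = sum(rt > cutoff for rt in after_ms)
    return cutoff, before_count, after_count


# Hypothetical response times (ms) before and after an optimization.
before = [100] * 19 + [1000]
after = [100] * 20
cutoff, before_count, after_count = compare_runs(before, after)
print(round(cutoff), before_count, after_count)  # → 537 1 0
```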

Conclusion

Load testing anomalies serve as early-warning signals for performance bottlenecks and system instabilities. By paying close attention to these outliers and correlating them with other system metrics, you can proactively identify and fix issues before they escalate into major incidents.