Introduction: The Challenge of Performance Testing Across Tech Stacks

Performance bottlenecks can break user experience – and fixing them often requires deep dives into tech stacks, complex tools, and long hours of scripting. That’s fine if you’re a seasoned engineer, but what if you’re a product owner, agency leader, student, or DevOps lead who just wants to know: How well does my app actually scale?

Good news: AI-powered tools now let you benchmark your application’s load test results, with analysis tailored to your tech stack, without writing a single line of code.

This article walks you through exactly how, using tools like LoadFocus, which automate both the testing and the analysis process for you.

What Is AI-Powered Load Testing?

Understanding the Basics

AI-powered load testing is the modern way of measuring how well your application performs under stress – without the manual labor. These tools simulate thousands of users, monitor key metrics like response time and error rate, and use machine learning to surface optimization insights tailored to your stack.

Why It’s a Game-Changer

- No coding required – Ideal for non-technical users and cross-functional teams.

- Stack-aware insights – Recommendations adjust based on the actual technologies you use.

- Time-saving – What took hours before can now be automated in minutes.

- Smart optimization – AI flags bottlenecks and shows exactly where to focus.

Why Your Tech Stack Matters in Load Testing

What’s a Tech Stack, Again?

Your tech stack is the combination of programming languages, frameworks, databases, servers, and cloud providers that make up your application.

For example:

- Frontend: React, Next.js

- Backend: Node.js

- Database: MongoDB

- Cloud: AWS

Different Stack, Different Problems

Not all stacks break the same way under load. A React app might slow down from poor rendering; a Django app might choke on database queries. AI tools that understand your stack give you relevant, not generic, recommendations.

Step-by-Step: Benchmark Load Test Results Without Coding using LoadFocus

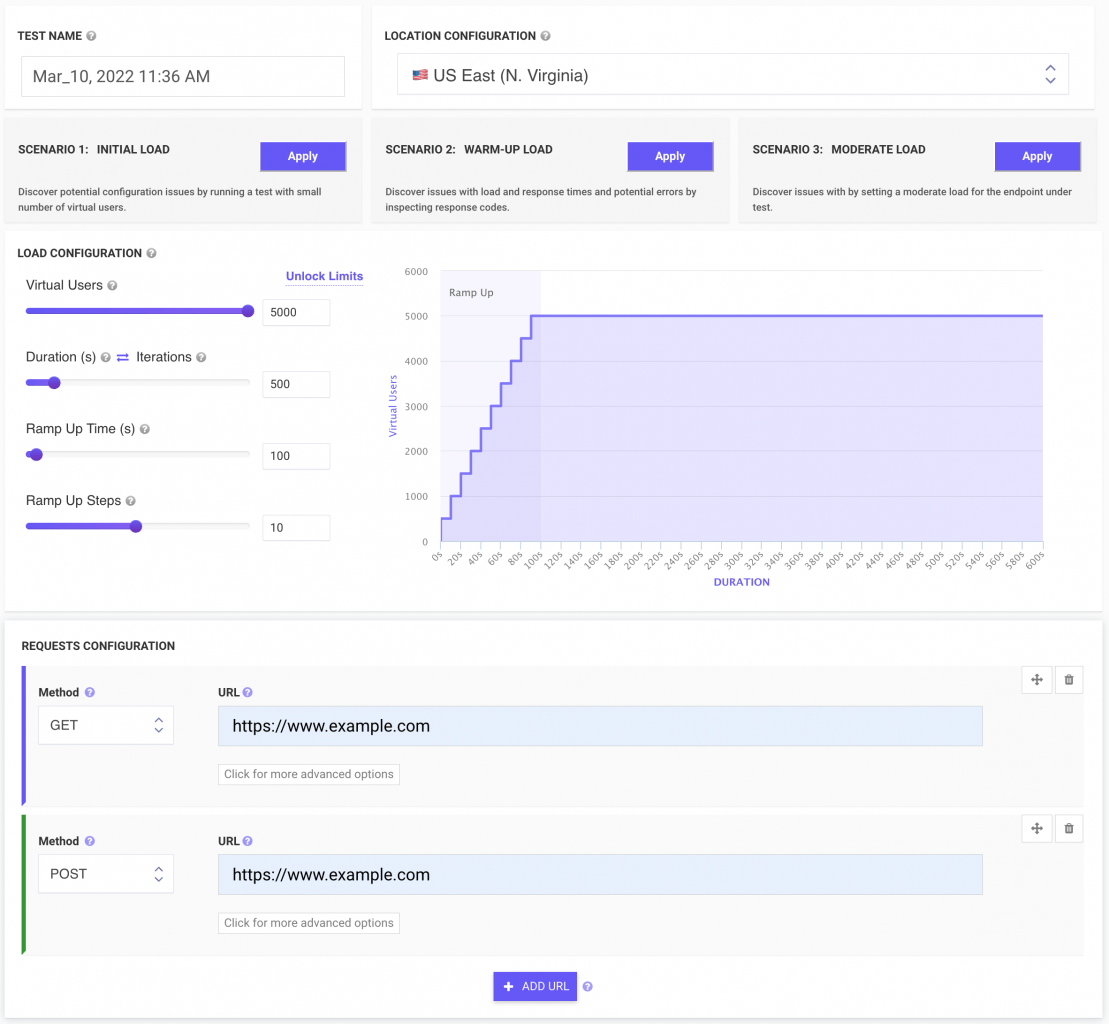

1. Run a Load Test With or Without an Apache JMeter Test Script

With LoadFocus you can run a load test directly against a URL, or run one from a test script built with an external tool like Apache JMeter.

You can reuse a saved stack or build a new one in seconds.

2. Run the Test

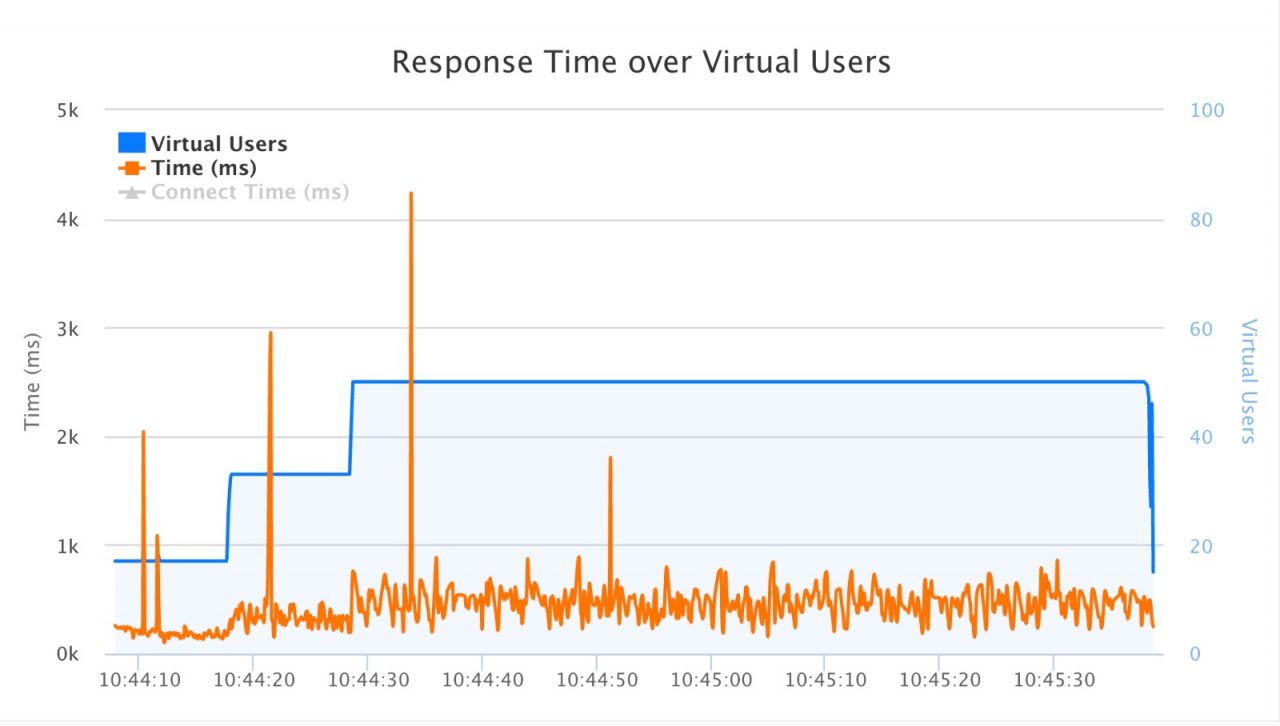

Once your stack is set, run a load test directly in LoadFocus or upload results from JMeter.

The tool will simulate traffic – e.g., 5 virtual users over 60 seconds – and collect response times, error rates, and throughput.

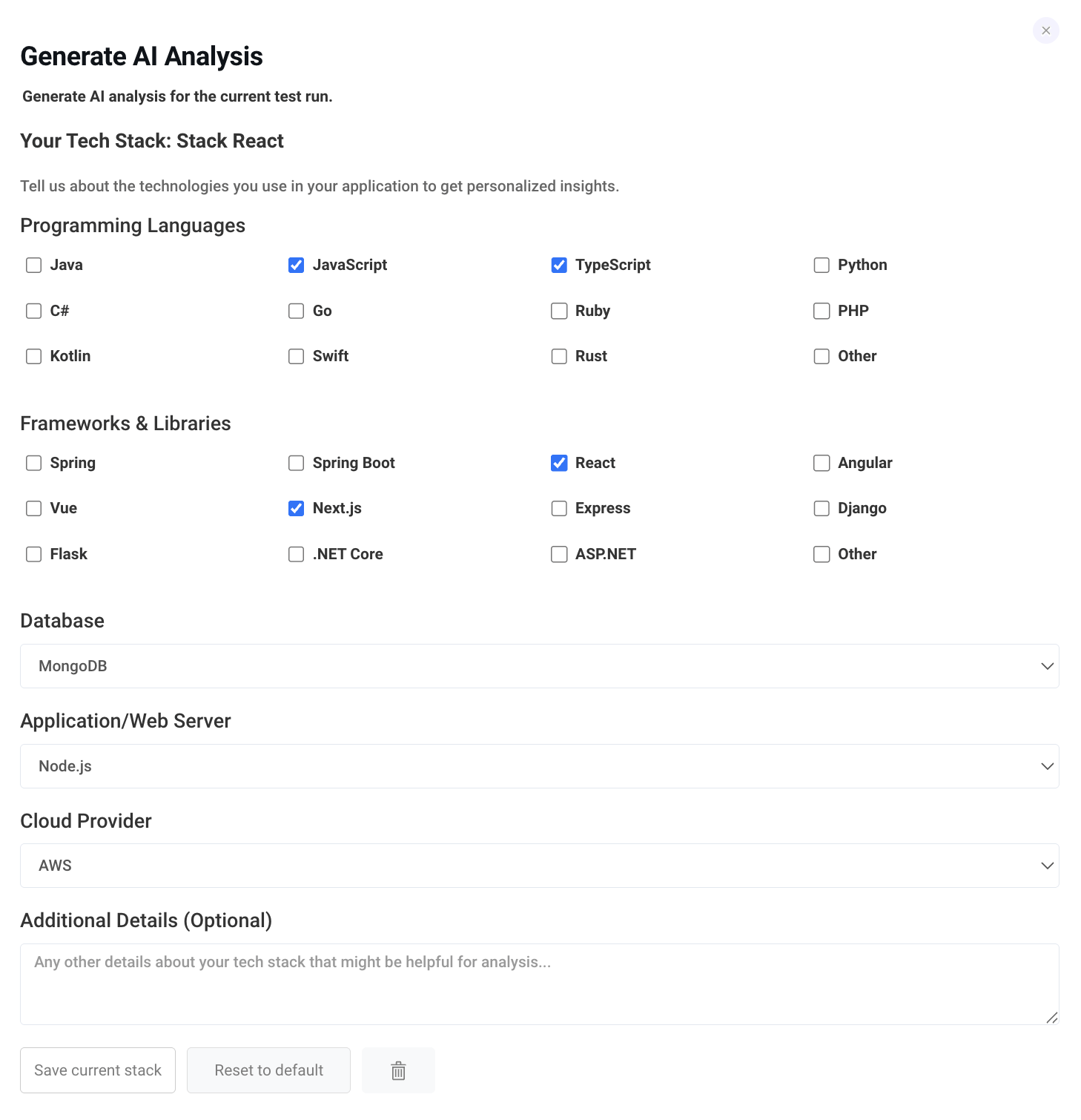

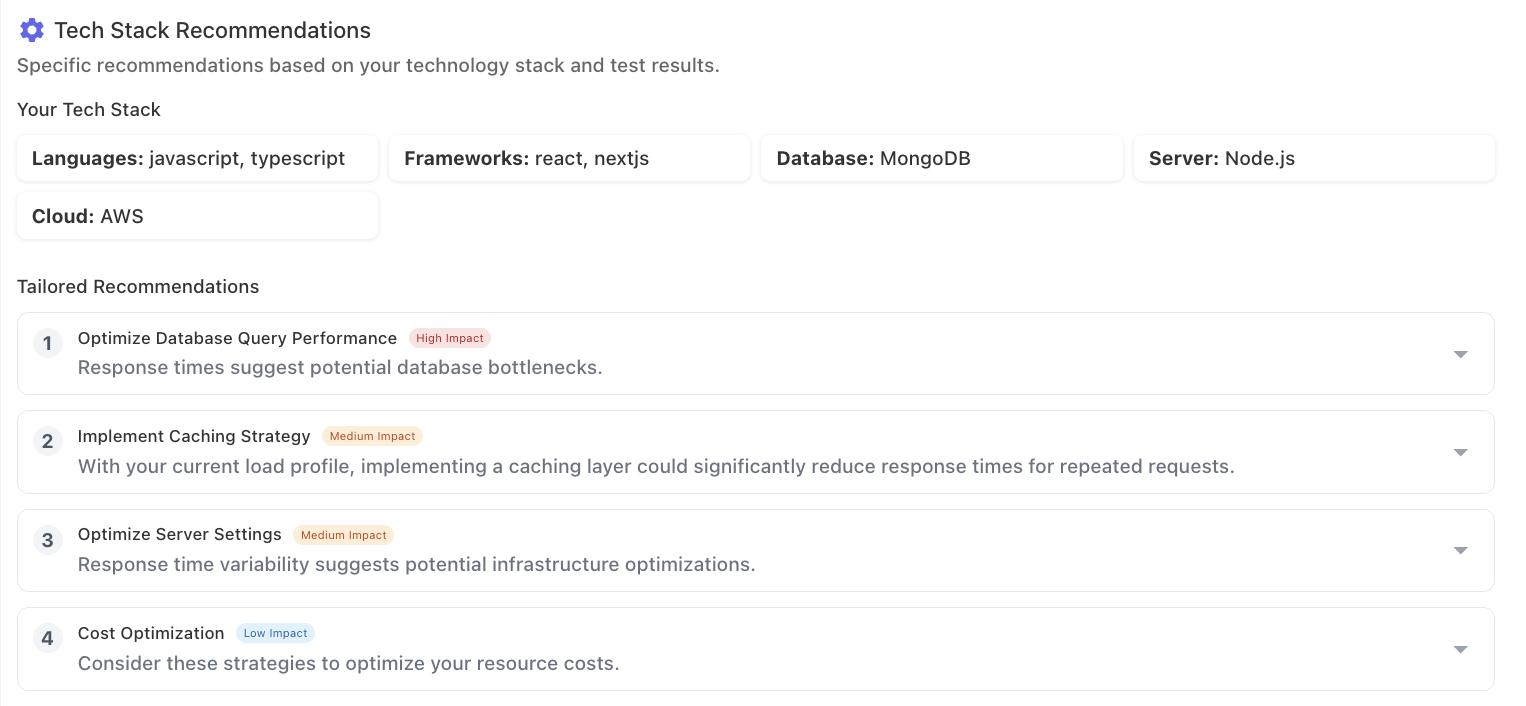
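To make the idea of "virtual users" concrete, here is a minimal Python sketch of the mechanics: a few workers send requests in parallel for a fixed duration while response times and failures are recorded. It is an illustration only, not how LoadFocus works internally. The names `run_load_test`, `run_virtual_user`, `Sample`, and `fake_request` are hypothetical, and `fake_request` stands in for a real HTTP call so the sketch runs without a network.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Sample:
    elapsed_ms: float  # time taken by one request
    ok: bool           # True if the request succeeded


def run_virtual_user(request: Callable[[], bool], deadline: float) -> List[Sample]:
    """One virtual user: send requests back to back until the deadline."""
    samples = []
    while time.monotonic() < deadline:
        start = time.monotonic()
        try:
            ok = request()
        except Exception:
            ok = False  # count any exception as a failed request
        samples.append(Sample((time.monotonic() - start) * 1000, ok))
    return samples


def run_load_test(request: Callable[[], bool], users: int, duration_s: float) -> dict:
    """Run `users` virtual users in parallel for roughly `duration_s` seconds."""
    deadline = time.monotonic() + duration_s
    with ThreadPoolExecutor(max_workers=users) as pool:
        futures = [pool.submit(run_virtual_user, request, deadline) for _ in range(users)]
        samples = [s for f in futures for s in f.result()]
    total = len(samples)
    errors = sum(1 for s in samples if not s.ok)
    return {
        "requests": total,
        "avg_ms": sum(s.elapsed_ms for s in samples) / total if total else 0.0,
        "max_ms": max((s.elapsed_ms for s in samples), default=0.0),
        "error_rate": errors / total if total else 0.0,
        # Approximate: the last requests may finish slightly after the deadline.
        "throughput_rps": total / duration_s,
    }


def fake_request() -> bool:
    """Hypothetical stand-in for an HTTP call: sleeps 10 ms and succeeds."""
    time.sleep(0.01)
    return True


if __name__ == "__main__":
    # The example in the text uses 5 users over 60 seconds; 1 second keeps the demo short.
    print(run_load_test(fake_request, users=5, duration_s=1.0))
```

To try it against a real endpoint, you would replace `fake_request` with a function that performs the HTTP call and returns whether it succeeded. A hosted tool handles the rest for you: distributed load generation, ramp-up, and reporting.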

3. Configure or Select Your Tech Stack

LoadFocus lets you define your tech stack by selecting:

- Languages (JavaScript, TypeScript, Python, etc.)

- Frameworks (React, Next.js, Flask, etc.)

- Database (MongoDB, PostgreSQL, etc.)

- Server (Node.js, Tomcat, etc.)

- Cloud Provider (AWS, Azure, etc.)

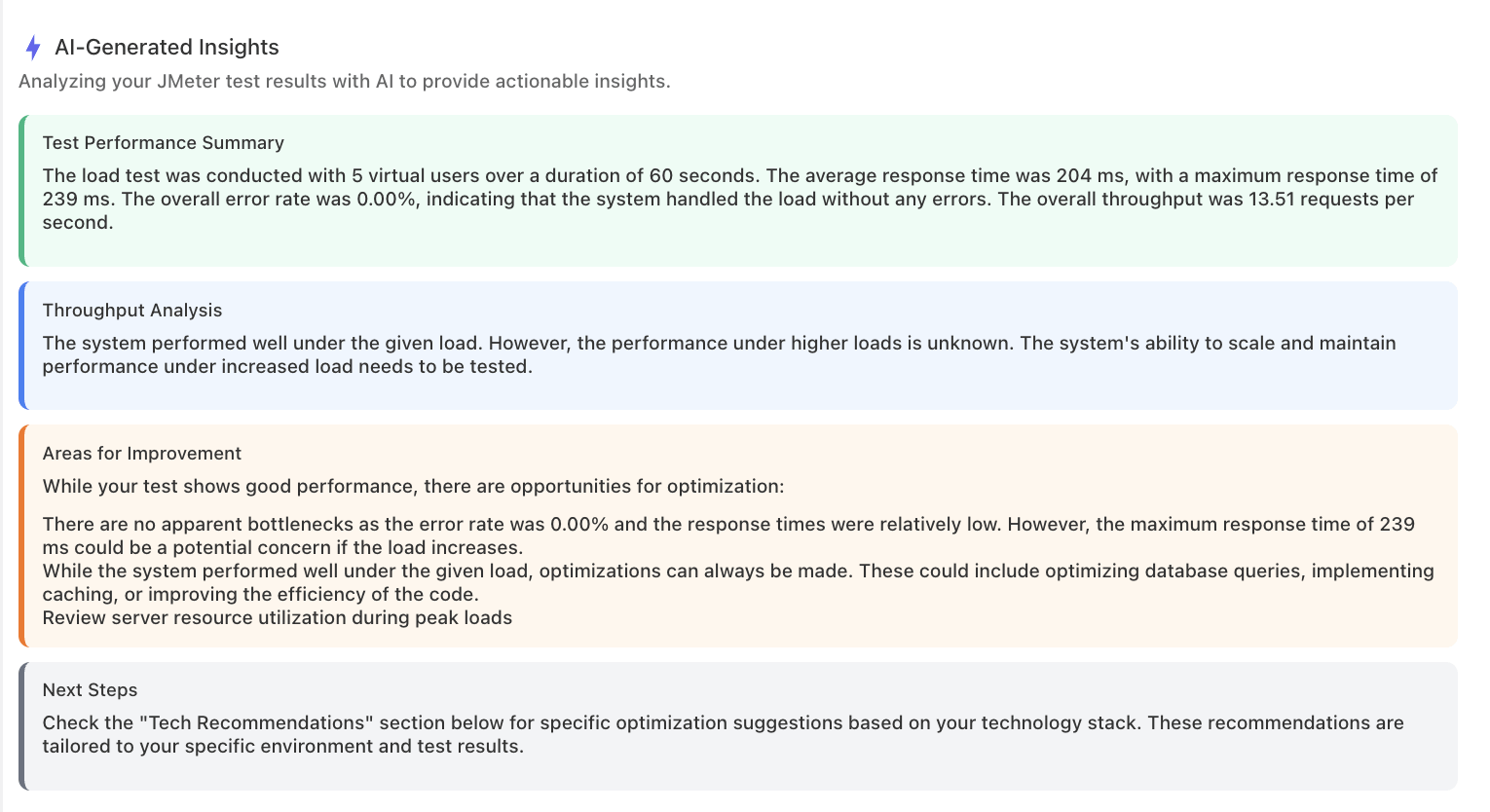
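If you want to keep the same stack definition in your own notes or scripts, the five categories above map naturally onto a small data structure. The sketch below is a hypothetical representation, not a LoadFocus format or API; the `TechStack` class and its field names are assumptions made for illustration.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class TechStack:
    """Hypothetical container mirroring the five categories listed above."""
    languages: List[str] = field(default_factory=list)
    frameworks: List[str] = field(default_factory=list)
    database: str = ""
    server: str = ""
    cloud_provider: str = ""

    def describe(self) -> str:
        """One-line summary, e.g. for a report header."""
        return (
            f"Languages: {', '.join(self.languages)}; "
            f"Frameworks: {', '.join(self.frameworks)}; "
            f"Database: {self.database}; "
            f"Server: {self.server}; "
            f"Cloud: {self.cloud_provider}"
        )


# Values taken from the options named in this article.
stack = TechStack(
    languages=["JavaScript", "TypeScript"],
    frameworks=["React", "Next.js"],
    database="MongoDB",
    server="Node.js",
    cloud_provider="AWS",
)
print(stack.describe())
```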

4. Let AI Do the Analysis

This is where the magic happens.

With one click, LoadFocus analyzes your results using AI, layering insights specific to your tech stack. You get:

- Performance Summary – Avg/max response times, error rate, throughput.

- Throughput Insights – How performance holds up under load.

- Bottlenecks – Database, caching, infrastructure problems.

- Recommendations – Ordered by impact: high, medium, low.
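For readers curious where the Performance Summary numbers come from, the sketch below computes average and maximum response time, error rate, and throughput from JMeter-style CSV results. It relies on three columns JMeter writes to CSV result files (`timeStamp`, `elapsed`, `success`) and assumes `timeStamp` is the sample start time in epoch milliseconds. The function name `summarize_jtl` and the sample rows are made up for illustration, and this is not a description of how LoadFocus computes its own summary.

```python
import csv
import io


def summarize_jtl(csv_text: str) -> dict:
    """Summarize JMeter CSV results using the timeStamp, elapsed and success columns."""
    rows = list(csv.DictReader(io.StringIO(csv_text)))
    if not rows:
        raise ValueError("no samples found")
    elapsed = [int(r["elapsed"]) for r in rows]    # response time in ms
    starts = [int(r["timeStamp"]) for r in rows]   # assumed: request start, epoch ms
    failures = sum(1 for r in rows if r["success"].lower() != "true")
    # Wall-clock span from the first request start to the last response end.
    span_ms = max(s + e for s, e in zip(starts, elapsed)) - min(starts)
    return {
        "samples": len(rows),
        "avg_ms": sum(elapsed) / len(rows),
        "max_ms": max(elapsed),
        "error_rate": failures / len(rows),
        "throughput_rps": len(rows) / (span_ms / 1000) if span_ms else 0.0,
    }


# Made-up sample data; a real results file has more columns and many more rows.
SAMPLE_JTL = """timeStamp,elapsed,label,responseCode,success
1700000000000,120,Home,200,true
1700000000500,180,Home,200,true
1700000001000,300,Home,500,false
1700000001500,200,Home,200,true
"""

summary = summarize_jtl(SAMPLE_JTL)
print(summary)
```

With the four sample rows, the average is 200 ms, the maximum is 300 ms, and one failed request out of four gives an error rate of 25%.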

New! AI-Assisted Reports You Can Print and Share

Thanks to a recent LoadFocus feature, you can now generate printable PDF reports that include all your AI-assisted insights. This is perfect for stakeholders, team updates, or technical audits.

How to Print AI Analysis in LoadFocus:

- Go to the AI Assist tab in your test run.

- Click New AI Analysis and select your tech stack.

- Once the analysis completes, hit the print icon next to the result.

- Choose Save as PDF to generate a shareable report.

This report includes:

- A timestamped performance overview

- AI-generated insights

- Stack-aware optimization tips

- Tailored next steps

Explore more here: Load Test Result AI Analysis Guide

What Do LoadFocus AI Recommendations Look Like?

Here’s what you might see after a test:

🟥 Optimize Database Query Performance (High Impact)

“MongoDB queries are causing slowdowns under high load. Optimize indexing, and use server-side rendering in Next.js for faster responses.”

🟧 Implement a Caching Strategy (Medium Impact)

“Adding a Redis layer could dramatically reduce response times for repeated requests as your user base scales.”

🟧 Optimize Server Settings (Medium Impact)

“Configure AWS auto-scaling to maintain responsiveness during peak usage.”

🟩 Cost Optimization (Low Impact)

“Consider serverless options (e.g., AWS Lambda) to cut costs for off-peak loads.”

These aren’t vague best practices – they’re grounded in the actual stack and test data you provide.
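The impact labels on the sample recommendations above work like a sort key: high first, then medium, then low. Here is a small sketch of that ordering, using the four titles from the examples. The `Recommendation` class and `rank_by_impact` function are hypothetical names chosen for illustration, not part of any LoadFocus export format.

```python
from dataclasses import dataclass
from typing import List

IMPACT_ORDER = {"high": 0, "medium": 1, "low": 2}


@dataclass
class Recommendation:
    title: str
    impact: str  # "high", "medium" or "low"


def rank_by_impact(items: List[Recommendation]) -> List[Recommendation]:
    """Sort high -> medium -> low; sorted() is stable, so input order is kept within a level."""
    return sorted(items, key=lambda r: IMPACT_ORDER[r.impact])


recs = [
    Recommendation("Cost Optimization", "low"),
    Recommendation("Implement a Caching Strategy", "medium"),
    Recommendation("Optimize Database Query Performance", "high"),
    Recommendation("Optimize Server Settings", "medium"),
]
for r in rank_by_impact(recs):
    print(f"[{r.impact.upper()}] {r.title}")
```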

A Word on LoadFocus

Whether you’re new to load testing or managing complex CI/CD pipelines, LoadFocus takes the heavy lifting out of performance optimization. Its AI-powered load testing lets you:

- Run and analyze tests without code

- Customize analysis to your tech stack

- Generate PDF reports instantly

- View impact-ranked optimization recommendations

It’s a no-brainer for software teams, agencies, and DevOps leads looking for real results – fast.

FAQ: Everything You Wanted to Know About AI and Load Testing

What is artificial intelligence in software testing?

AI in testing automates test case creation, execution, and result analysis using machine learning to improve accuracy and reduce manual work.

What is a tech stack in testing?

A tech stack is the combination of all technologies (languages, frameworks, databases, etc.) used in your app – critical to tailoring performance tests effectively.

What technology is used in performance testing tools?

Technologies include traffic generators (e.g., JMeter), monitoring systems, analytics platforms, and, increasingly, AI for automated insights.

What does my tech stack include?

It includes frontend/backend languages, frameworks, databases, web servers, cloud providers, and third-party services powering your app.

Does performance testing need coding?

Not necessarily. Tools like LoadFocus offer no-code options, though scripting may be needed for advanced, custom scenarios in some tools.

Does JMeter require coding?

JMeter has a GUI for most tasks but supports coding in Java, Groovy, or BeanShell for advanced logic or plugin development.

What is the future of performance testing?

More AI, more automation, tighter CI/CD integration, and broader accessibility to non-engineers.

Is there any AI tool for testing?

Yes. LoadFocus and other tools use AI to automate testing workflows, analyze results, and recommend fixes.

How to become an AI software tester?

Learn testing fundamentals, get familiar with AI/ML basics, and start using AI testing tools hands-on.

Can AI do QA testing?

AI can automate many QA processes, such as regression testing, bug triaging, and pattern recognition that guides exploratory testing.

How to do AI testing?

AI testing involves testing AI models for accuracy, performance, and fairness, and also using AI to test conventional software.

What is IQ in software testing?

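It is not a standard term in general software testing. In regulated industries, IQ usually stands for Installation Qualification: the validation step that verifies software is installed and configured according to its specification, typically alongside Operational Qualification (OQ) and Performance Qualification (PQ).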

Can AI write test cases?

Yes—some tools auto-generate test cases based on app behavior or user journeys.

Is AI testing a good career?

Yes! It combines software testing and AI/ML—two booming fields—with growing demand in enterprise and startup sectors.

Does QA testing have a future?

Absolutely. As software complexity grows, QA is more critical than ever—and automation/AI will only enhance its role.